Bachelor thesis

A Concept for the Future of Multimodal Voice User Interfaces and Voice Assistants

This bachelor thesis deals with the future development of voice user interfaces (VUIs). It shows how VUIs will become more relevant and how they will function. "A Concept for the Future of Multimodal Voice User Interfaces and Voice Assistants" presents an interaction system that shows how VUIs and voice assistants (VAs) can be integrated into the workflow on the computer and on the Internet. The project presents voice user interfaces outside the “Home” context in which they currently exist in the form of Alexa and Google Home. Unlike existing voice user interfaces, our system operates in a new domain and connects programs and services across the board.

“By 2020, the average person will have more conversations with bots than with their spouse.”

Exhibition stand.

Why Voice User Interfaces?

Language is one of the most natural and human forms of communication; it allows a lot of information to be conveyed quickly and easily. We are sure that voice user interfaces will play a very dominant role in the future, but today we associate them only with voice assistants such as Amazon Alexa, Google Assistant or Apple Siri. These voice assistants are currently unable to solve complex tasks and therefore remain toys in the living room. The first primitive speech computers appeared almost 60 years ago, but only in the last few years have there been significant advances in deep learning, an area of artificial intelligence, and in natural language processing. We have therefore focused our concept on the next few years, on the assumption that by then artificial intelligence and language processing will be far more advanced than what we know from current voice assistants.

How does a VUI become a paradigm?

The first apps came along with the smartphone and changed our access to information considerably. Before that, people mainly worked on a desktop computer, which was also used for all Internet searches. With the advent of mobile applications, the way we handle devices changed. Nowadays people edit pictures, write emails and do research on their smartphones while on the go.

So what must a voice user interface look like for voice interaction to become comparably functional and applicable? We no longer see voice assistants as a pure voice user interface in the form of a smart speaker, but above all as multimodal and firmly integrated into devices. By multimodal we mean the combination of different forms of interaction, for example voice together with a mouse and keyboard on a computer.

But how must such a voice user interface and voice assistant be structured so that they can function in this way?

Parameters for a voice user interface

Through our research, we were able to define various parameters and requirements for a good voice user interface. We are sure that a good VUI is only possible if it is firmly integrated into the system, can therefore access all devices, and has a certain degree of artificial intelligence.

1. Integration

The voice user interface must be firmly linked to the system so that it can interact with other applications and interfaces, such as the graphical user interface. It needs access to all areas of the computer or network in which it is supposed to help the user.

2. Artificial intelligence

The AI enables the voice user interface to act independently. It also allows the VUI to recognize the user's behavioral patterns and adapt to them. With the help of a capable AI, the VUI becomes more than just a "toy". In addition, natural language processing must serve as the basis for understanding speech, so that the VUI can react to more than just keywords.

Parameters for a voice assistant

For a voice assistant to become a genuinely helpful form of interaction, we identify the following two requirements that set it apart from current concepts:

1. One Assistant

The assistant must be customizable and accessible on every device. The user uses one assistant for several areas of application, which means that the assistant must be a single system. A distinction can be made, however, between the user's personal everyday assistant and a voice assistant that belongs to another person or a company.

2. Context knowledge

Good contextual knowledge requires, first, that the assistant has access to the data and the corresponding editing rights, depending on how advanced the AI is. Second, sensors play a major role. Depending on the environment the user is in, different sensors must be installed in the room or on the corresponding device; a microphone and a loudspeaker are not sufficient. Everyone currently expects a voice assistant to be a butler, but today's situation is comparable to a butler who has no access to anything, who neither knows nor sees the user, and who cannot communicate with him.

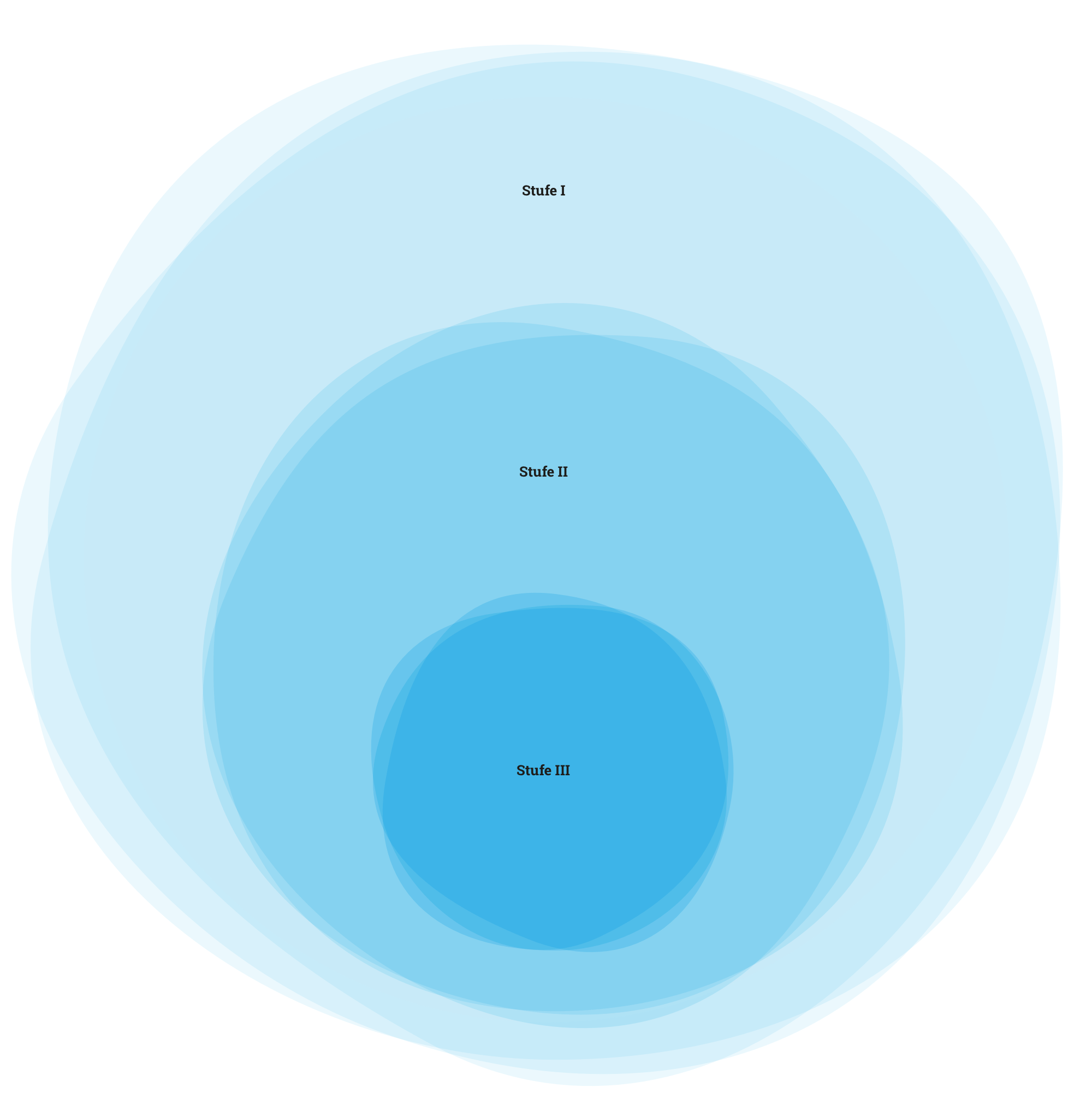

The stages of a voice assistant

Our research has also shown that a voice assistant can act in three stages. With each stage, the artificial intelligence and contextual knowledge of the assistant increase, allowing it to carry out more complex tasks.

Stage I

External access: The voice assistant is firmly integrated into the system and can access files and applications, for example to open or send files.

Stage II

Internal access: The assistant not only recognizes the file or application, but can also actively access its content. The content is made accessible to the assistant so that it can understand it.

Stage III

Full access: In the last stage, the assistant has full access to content and structure. It processes and interprets the content independently and thus actively supports the user. This is where the artificial intelligence must be most advanced.
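The three stages can be read as a cumulative permission model, in which each stage includes the capabilities of the one before it. The following Python snippet is only a minimal sketch of that reading, not part of the thesis concept itself: the action names (`open_file`, `send_file`, `read_content`, `summarize_content`) and the function `is_allowed` are hypothetical and chosen purely for illustration.

```python
from enum import IntEnum


class Stage(IntEnum):
    """The three stages from the text; a higher stage includes the lower ones."""
    EXTERNAL = 1  # Stage I: open and send files, start applications
    INTERNAL = 2  # Stage II: access the content of a file or application
    FULL = 3      # Stage III: process and interpret content independently


# Hypothetical example actions, mapped to the minimum stage they require.
REQUIRED_STAGE = {
    "open_file": Stage.EXTERNAL,
    "send_file": Stage.EXTERNAL,
    "read_content": Stage.INTERNAL,
    "summarize_content": Stage.FULL,
}


def is_allowed(assistant_stage: Stage, action: str) -> bool:
    """Return True if an assistant at the given stage may perform the action."""
    required = REQUIRED_STAGE.get(action)
    if required is None:
        return False  # unknown actions are refused
    return assistant_stage >= required
```

Under these assumptions, a Stage I assistant could send a file but not read its content, while a Stage III assistant could do both and also summarize it.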

Selected scenarios

We have designed various scenarios based on our research results. They build on our stage model and on the parameters we have defined for a voice assistant. In these scenarios, the user works on a desktop computer with a keyboard and mouse. With the integrated voice assistant, the user can carry out simple tasks by voice in parallel to the current workflow and thus work more effectively.

Team

Anita Kerbel

Esther Bullig

Simon Lutter

Supervised by

Prof. David Oswald

Prof. Dr. Ulrich Barnhoefer

Project

Bachelor thesis

7th Semester Winter 2018/19

HfG Schwaebisch Gmuend